Primer | Observational Studies

- Introduction

- Research question and hypothesis

- Knowledge gap

- Sampling

- Outcomes

- Exposure groups

- Confounding, biases, and errors

- Effect modification

- Mediators, colliders, and instrumental variables

- Essential elements of an observational study

Introduction

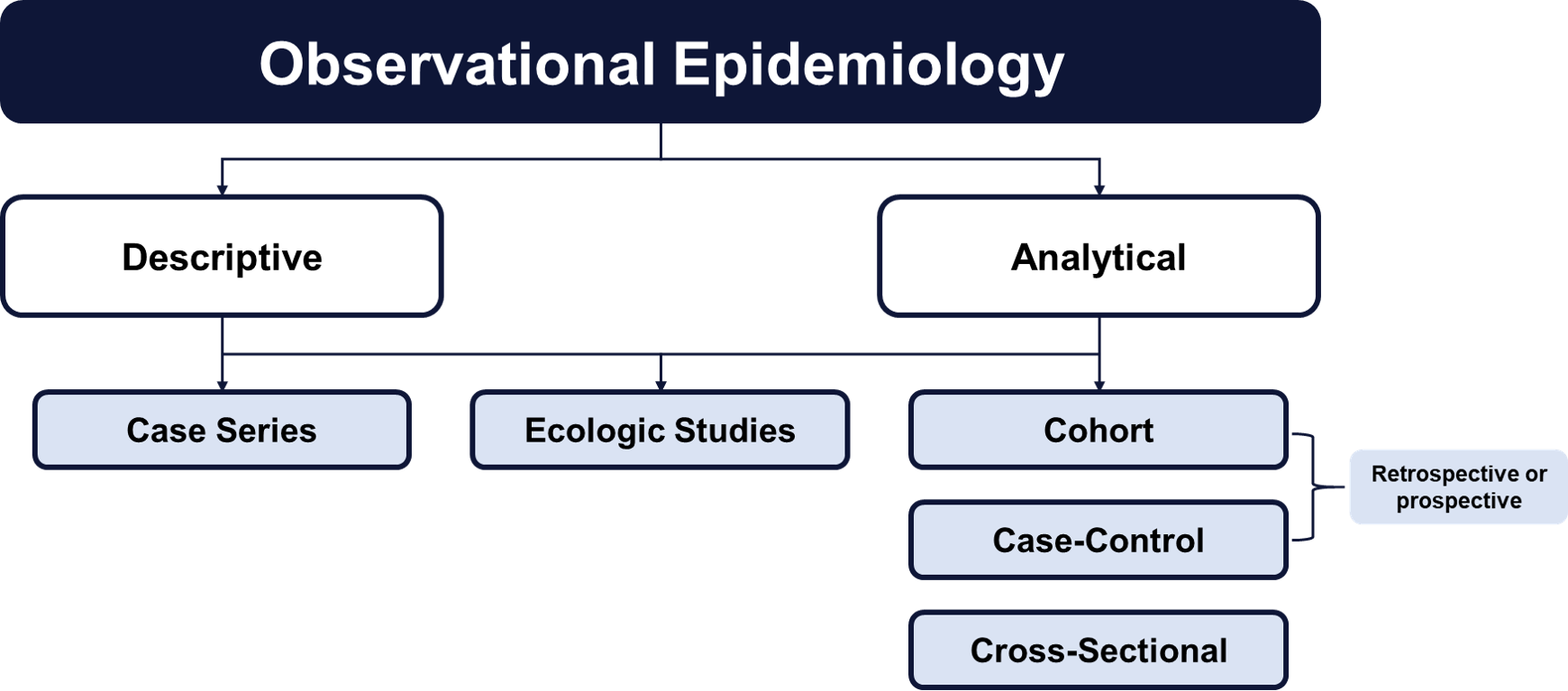

Observational studies may be considered the complement to experimental studies (i.e., randomized controlled trials). Study designs falling into this category are observational because researchers simply observe the effect of a factor (or exposure) on an outcome. Because these designs do not directly intervene on exposure status and group assignment is not formally controlled, the assessment of cause and effect must be carefully considered.

Research Question and Hypothesis

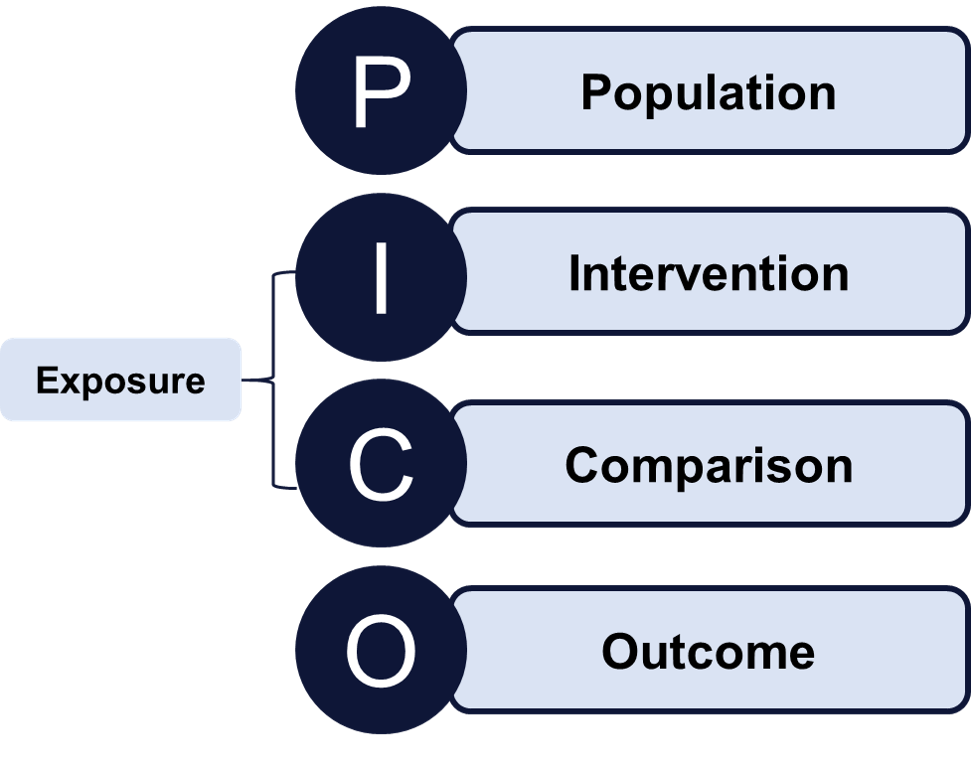

Developing the study’s research question and associated hypothesis is the cornerstone of study design. The research question is a significant marker of study quality. It forecasts the subsequent direction of data collection, analysis, interpretation, and application. One way to effectively visualize and describe a research question is by using the PICO format (Population, Intervention, Comparison, Outcome), where the exposure takes the place of the intervention in an observational study.

A clear question and hypothesis, at the outset, will lead to a clear objective that is understandable and reproducible.

Knowledge Gap

The research question and objective should be developed to address a gap in understanding a disease process, policy implication, or social determinant of health. While observational studies often fill gaps in the literature, they also provide the preliminary data needed to justify testing causality with experimental study designs. Considering the knowledge gap early on will not only impact the significance of study findings but will also inform the choice of covariates, effect modifiers, and confounders.

Sampling

The sampling strategy (i.e., eligibility criteria) determines the researchers’ and audience’s ability to generalize findings to similar populations. In other words, the sample’s characteristics identify the outer boundaries for interpreting study findings. Carefully choosing inclusion and exclusion criteria will minimize selection bias while allowing for robust adjustment and effect modification testing.

Outcomes

Appropriately identifying and defining an outcome – in both observational and experimental studies – is critical to the validity of findings and interpretation of results.

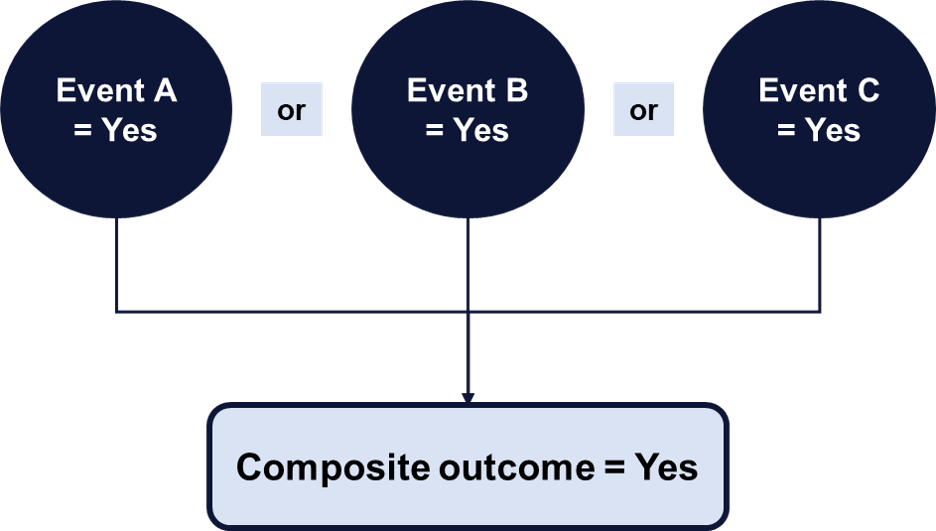

The incidence of the primary outcome, relative to the exposure, determines the sample size needed to detect between-group differences with adequate statistical power. The primary outcome may be a single, directly measured outcome or a composite of multiple events. Composite outcomes are useful because they increase the number of observed events and therefore statistical efficiency.

Secondary outcomes may be defined to supplement the understanding around the primary question. However, findings associated with secondary outcomes must be interpreted with caution as the study’s power (i.e., ability to detect statistically significant differences) is anchored on the primary outcome.

Choice of the primary and secondary outcomes should be based on the clinical and potential societal relevance of the study question. If these conditions of relevance are not met, applying and interpreting findings becomes a significant challenge.

Exposure Groups

As with defining the study outcome(s), defining the study exposure is a critical aspect of study design. In experimental studies, the exposure is often defined by the presence or absence of an intervention. In observational studies, the exposure must be defined by the researchers, and incorrect or inadequate exposure definitions can lead to measurement and misclassification biases. Each study design is primarily built around a single exposure-outcome relationship. It is important to keep this association at the center of study design, analysis, and interpretation.

Confounding, Biases, and Errors

Confounding

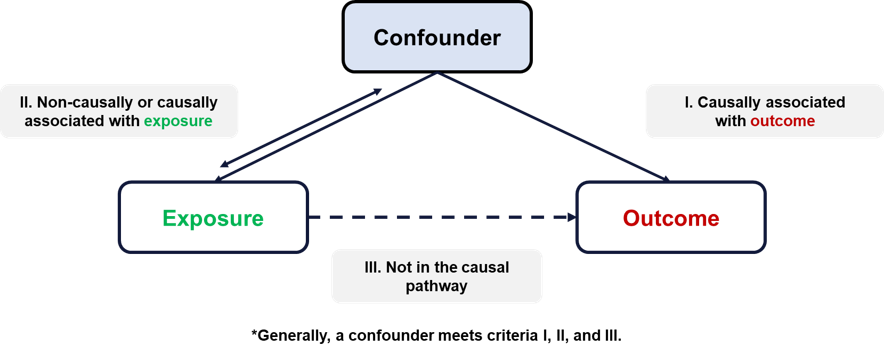

Because they are uncontrolled, non-experimental studies, observational designs are susceptible to external factors that spuriously influence the primary exposure-outcome relationship.

A familiar concept to consider when designing, applying, and interpreting observational research is confounding. In simple terms, confounding is the distortion of an exposure-outcome relationship by a third variable (or group of variables) that is associated with the exposure and independently affects the outcome. The confounder’s association with the outcome is real, but the exposure-outcome association it produces is noncausal. When confounding fully accounts for an observed association, stratifying or adjusting for the confounder eliminates that association, showing that it was spurious. In that case, the confounder creates the illusion of an association between exposure and outcome.

In randomized controlled trials, the effect of confounders is minimized because the randomly assigned groups are expected to be comparable with respect to both known and unknown confounders.
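As a minimal sketch of how stratifying on a confounder can remove a crude association, the example below compares a crude odds ratio with a Mantel-Haenszel odds ratio pooled across strata of the confounder. The counts are hypothetical and chosen only for illustration; the function names are likewise assumptions, not part of any library.

```python
def odds_ratio(a, b, c, d):
    """Odds ratio for a 2x2 table.

    a, b = exposed with / without the outcome
    c, d = unexposed with / without the outcome
    """
    return (a * d) / (b * c)


def mantel_haenszel_or(strata):
    """Mantel-Haenszel odds ratio pooled over a list of (a, b, c, d) strata."""
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in strata)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in strata)
    return numerator / denominator


# Hypothetical counts, one 2x2 table per level of the confounder.
strata = [
    (80, 120, 20, 30),   # confounder present
    (5, 45, 20, 180),    # confounder absent
]

# Collapsing over the confounder gives the crude (unadjusted) table.
crude_table = tuple(sum(cells) for cells in zip(*strata))

crude_or = odds_ratio(*crude_table)
stratum_ors = [odds_ratio(*table) for table in strata]
adjusted_or = mantel_haenszel_or(strata)

print(f"crude OR: {crude_or:.2f}")
print(f"stratum-specific ORs: {[round(x, 2) for x in stratum_ors]}")
print(f"Mantel-Haenszel OR: {adjusted_or:.2f}")
```

In these made-up data the exposure is far more common when the confounder is present, and the outcome is also more common there, so the crude odds ratio is well above 1 even though each stratum shows no association.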

Biases

Unlike confounding, which arises from a real association between the confounder and the outcome, biases are systematic errors in the design or conduct of a study. A bias may produce untrue or erroneous associations. Biases may be classified broadly into two groups:

- Selection bias refers to systematic differences in the probability of being included in the study sample. An example of this bias would be surveillance bias.

- Information bias refers to the systematic tendency for individuals to be incorrectly placed in exposure or outcome categories. Examples of this bias include recall bias and misclassification bias. Confounding by indication, although often discussed alongside these biases, is a form of confounding rather than an information bias.

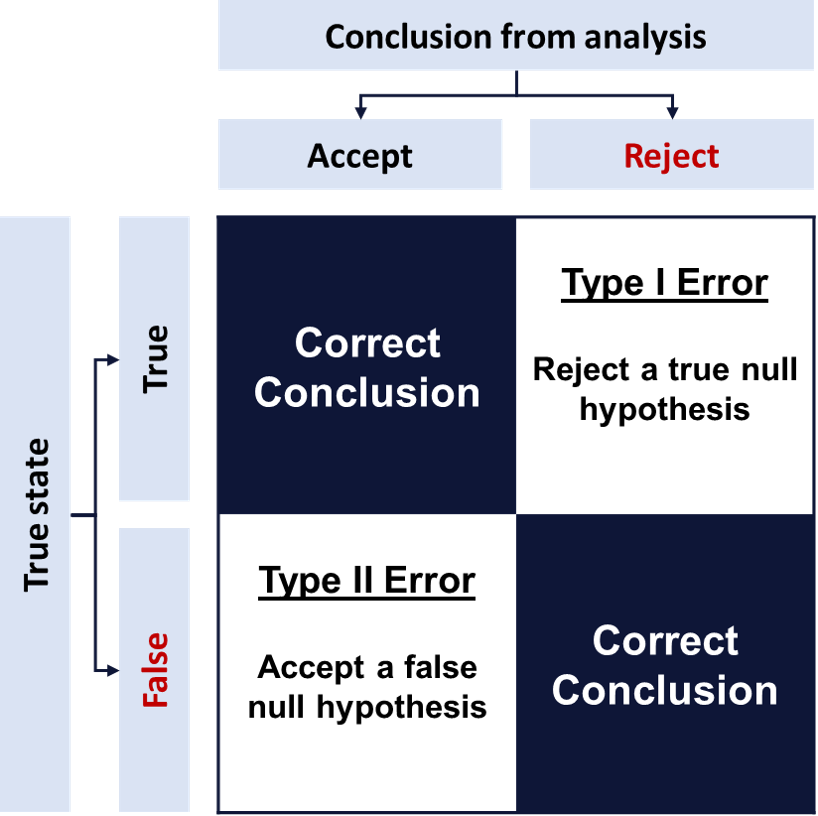
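To make misclassification concrete, the sketch below applies an imperfect exposure measurement to a hypothetical case-control table and recomputes the odds ratio. The counts, the sensitivity and specificity values, and the function names are all assumptions for illustration. The same error rates are applied to cases and controls, which is the nondifferential case.

```python
def misclassify(exposed, unexposed, sensitivity, specificity):
    """Expected observed counts when exposure is measured with error."""
    observed_exposed = sensitivity * exposed + (1 - specificity) * unexposed
    observed_unexposed = (1 - sensitivity) * exposed + specificity * unexposed
    return observed_exposed, observed_unexposed


def odds_ratio(case_exp, case_unexp, ctrl_exp, ctrl_unexp):
    """Exposure odds ratio comparing cases with controls."""
    return (case_exp * ctrl_unexp) / (case_unexp * ctrl_exp)


# Hypothetical true exposure counts.
true_cases = (100, 100)     # exposed, unexposed
true_controls = (50, 150)   # exposed, unexposed

# Assumed measurement properties, identical for cases and controls.
sensitivity, specificity = 0.8, 0.9

observed_cases = misclassify(*true_cases, sensitivity, specificity)
observed_controls = misclassify(*true_controls, sensitivity, specificity)

true_or = odds_ratio(*true_cases, *true_controls)
observed_or = odds_ratio(*observed_cases, *observed_controls)

print(f"true OR: {true_or:.2f}, observed OR: {observed_or:.2f}")
```

With these inputs the observed odds ratio falls between 1 and the true value, the attenuation toward the null typically expected from nondifferential misclassification of a binary exposure.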

Errors

There is also a possibility that, because hypothesis tests are probabilistic, the conclusion drawn about the hypothesis under study is incorrect. These hypothesis-related errors are called Type I and Type II errors. They are described in the context of a null hypothesis.

Consider an example where the null hypothesis is that smoking does not increase the risk of lung cancer. Here a type I error would be concluding that smoking does increase lung cancer risk when it does not increase risk. A type II error would be concluding that smoking does not increase lung cancer risk when it does increase risk.

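The probabilities of type I and type II errors are controlled through the alpha and beta levels, which are specified before the analysis. Alpha is the accepted probability of a type I error (the significance level), beta is the accepted probability of a type II error, and statistical power equals 1 minus beta.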
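As a rough illustration of how the alpha and beta levels feed into study planning, the sketch below uses a common normal-approximation formula for comparing two proportions with equal group sizes. The outcome risks are hypothetical, the function name is an assumption, and real studies often use refinements (continuity corrections, unequal allocation, design effects) that are not shown here.

```python
import math
from statistics import NormalDist


def n_per_group(p1, p2, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided test of two proportions.

    n = (z_{1-alpha/2} + z_{1-beta})^2 * [p1(1-p1) + p2(1-p2)] / (p1 - p2)^2
    where power = 1 - beta.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


# Hypothetical outcome risks in the unexposed and exposed groups.
baseline = n_per_group(0.10, 0.15)
higher_power = n_per_group(0.10, 0.15, power=0.90)
stricter_alpha = n_per_group(0.10, 0.15, alpha=0.01)

print(f"alpha=0.05, power=0.80: {baseline} per group")
print(f"alpha=0.05, power=0.90: {higher_power} per group")
print(f"alpha=0.01, power=0.80: {stricter_alpha} per group")
```

Lowering alpha or beta (that is, demanding fewer false positives or more power) increases the required sample size for the same difference in risk.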

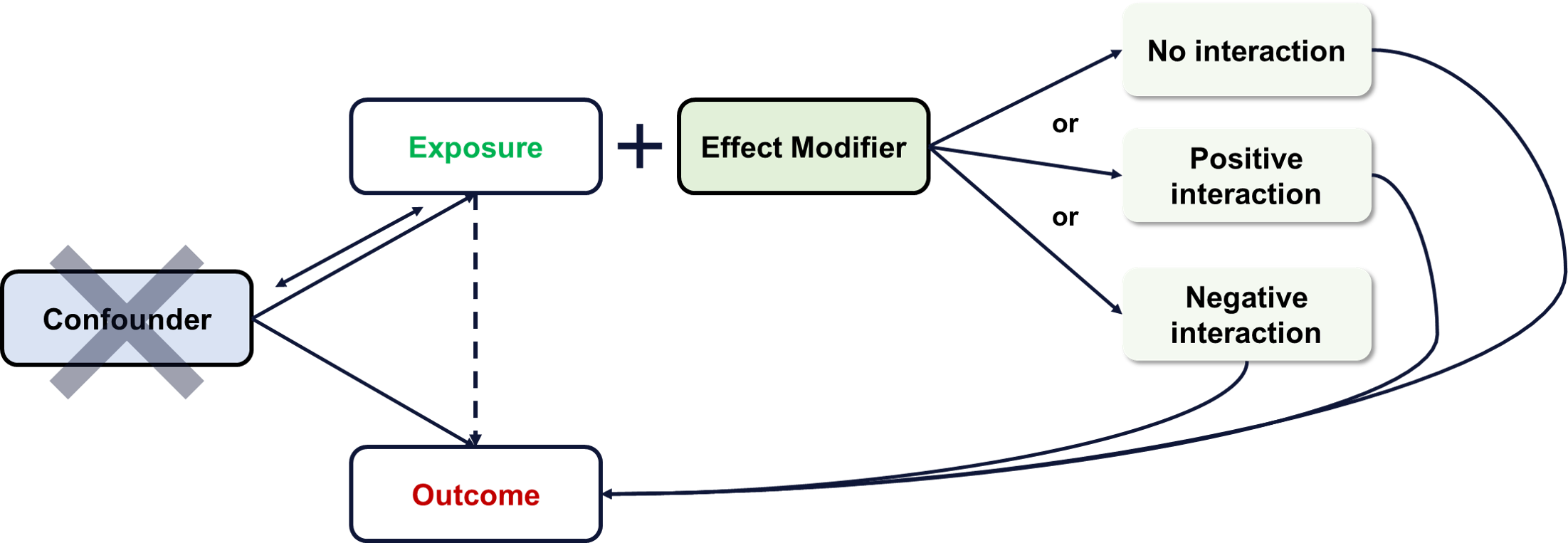

Effect Modification

Effect modification refers to the phenomenon where an exposure’s effect on an outcome is modified based on a third variable. Effect modification is different from confounding in that an effect modifier does not eliminate a significant exposure-outcome association but changes risk based on its levels.

An effect modifier does not explain the exposure-outcome relationship or create spurious associations. Rather, it works together with the exposure to highlight differences in the effect of the exposure on the outcome. In summary, an effect modifier describes separate additive/multiplicative effects with respect to the exposure and outcome association. Examining the role of effect modifiers on a given association often reveals clinically relevant nuances in risk interpretations and applications.
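A small worked example may help separate effect modification from confounding. The sketch below computes stratum-specific risk ratios and risk differences from hypothetical counts; the stratum labels and numbers are assumptions chosen for illustration.

```python
def risk(cases, total):
    """Proportion of a group that experiences the outcome."""
    return cases / total


# Hypothetical (cases, total) counts within each level of a third variable.
strata = {
    "modifier present": {"exposed": (30, 100), "unexposed": (10, 100)},
    "modifier absent": {"exposed": (12, 100), "unexposed": (10, 100)},
}

results = {}
for name, groups in strata.items():
    risk_exposed = risk(*groups["exposed"])
    risk_unexposed = risk(*groups["unexposed"])
    results[name] = {
        "risk_ratio": risk_exposed / risk_unexposed,            # multiplicative scale
        "risk_difference": risk_exposed - risk_unexposed,       # additive scale
    }

for name, measures in results.items():
    print(
        f"{name}: RR = {measures['risk_ratio']:.2f}, "
        f"RD = {measures['risk_difference']:.2f}"
    )
```

Here the exposure-outcome association is present in both strata but its size differs, so reporting the stratum-specific estimates is more informative than a single pooled value.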

Mediators, Colliders, and Instrumental Variables

A mediator variable explains the causal mechanism through which an exposure affects an outcome. It acts as an intermediary that carries the effect of the exposure on an outcome.

A collider variable is associated with both the exposure and the outcome but is not a confounder: it is a common effect of the two rather than a common cause. Because the collider depends on both the exposure and the outcome, conditioning on it (through adjustment, stratification, or sample selection) can induce a spurious exposure-outcome association, a form of selection bias. Collider bias is avoided by not conditioning on the collider; methods such as propensity score matching address confounding and do not remove it.
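As a minimal sketch of why conditioning on a collider is risky, the simulation below generates an exposure and an outcome that are independent by construction, plus a collider that depends on both. Restricting the sample by the collider's value then produces an association that does not exist in the full data. All variable names, coefficients, and the selection threshold are assumptions made for illustration.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 20000

# Exposure and outcome are generated independently of each other.
exposure = [rng.gauss(0, 1) for _ in range(n)]
outcome = [rng.gauss(0, 1) for _ in range(n)]

# The collider is caused by both the exposure and the outcome.
collider = [x + y + rng.gauss(0, 0.5) for x, y in zip(exposure, outcome)]

# Conditioning on the collider: keep only records with a high collider value.
selected = [(x, y) for x, y, c in zip(exposure, outcome, collider) if c > 1.0]

r_all = pearson(exposure, outcome)
r_selected = pearson([x for x, _ in selected], [y for _, y in selected])

print(f"correlation in full sample: {r_all:.3f}")
print(f"correlation after selecting on collider: {r_selected:.3f}")
```

In the full sample the correlation is close to zero, while in the selected subset it is clearly negative, even though neither variable affects the other.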

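An instrumental variable is a third variable that is associated with the exposure, affects the outcome only through the exposure, and shares no common causes with the outcome. It is used to estimate the effect of the exposure on the outcome when confounders are unmeasured or cannot be adjusted for directly.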
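One common way an instrumental variable is used is the ratio (Wald) estimator, which divides the instrument-outcome covariance by the instrument-exposure covariance. The simulation below is a sketch under strong assumptions: a linear data-generating model with a known true effect, a valid binary instrument, and an unmeasured confounder. None of these values come from the text; they are chosen so the behavior is easy to see.

```python
import random


def covariance(xs, ys):
    """Population covariance of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n


rng = random.Random(1)  # fixed seed so the run is repeatable
n = 50000
true_effect = 0.5  # assumed causal effect of exposure on outcome

# Binary instrument, independent of the unmeasured confounder.
instrument = [rng.randint(0, 1) for _ in range(n)]
confounder = [rng.gauss(0, 1) for _ in range(n)]

# The exposure depends on the instrument and the confounder.
exposure = [z + u + rng.gauss(0, 1) for z, u in zip(instrument, confounder)]

# The outcome depends on the exposure and the confounder, not on the instrument directly.
outcome = [
    true_effect * x + 2 * u + rng.gauss(0, 1)
    for x, u in zip(exposure, confounder)
]

# Naive regression slope of outcome on exposure, ignoring the confounder.
naive_estimate = covariance(exposure, outcome) / covariance(exposure, exposure)

# Wald (ratio) instrumental variable estimate.
iv_estimate = covariance(instrument, outcome) / covariance(instrument, exposure)

print(f"true effect: {true_effect}")
print(f"naive estimate: {naive_estimate:.2f}")
print(f"IV estimate: {iv_estimate:.2f}")
```

Under these assumptions the naive slope is pulled well away from the true effect by the unmeasured confounder, while the instrumental variable estimate lands close to it. In real data the instrument's validity cannot be fully verified and has to be argued from subject-matter knowledge.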

Essential Elements of an Observational Study

- Develop and refine the research question.

- Carefully consider population characteristics.

- Rigorously define outcome(s) and exposure.

- Specify expected confounders, a priori.

- Estimate the sample size necessary to identify significant differences. If sample size is not adjustable, estimate the power available to detect differences.

- Standardize data collection.

- Manage and analyze data appropriately.

- Cautiously consider limitations of the study and only conclude what is supported within these limitations.